From 2016 to 2026: How Intelligent Ecosystems Are Redefining Assistive Technology

In 2016, assistive technology for blind and visually impaired people reached a turning point.

That year marked the shift from single-purpose accessibility tools toward AI-powered, sensor-driven, and wearable systems designed to support real-world independence at scale.

A decade later, in 2026, the opportunity is no longer about building better standalone tools—it’s about building cohesive assistive ecosystems that reduce cognitive load, improve predictability, and integrate seamlessly into daily workflows.

For investors, partners, and innovators, this represents a platform moment in assistive technology.

2016: The Inflection Point That Set Today’s Market in Motion

Before 2016, most solutions revolved around:

- Screen readers

- Standalone OCR hardware

- GPS navigation apps

- Traditional white cane mobility

These tools worked—but they were fragmented. Users had to switch devices, apps, and mental models throughout the day, increasing friction and fatigue.

Beginning in 2016, several enduring shifts reshaped the trajectory of assistive innovation.

Trend 1: AI Shifted Assistive Tech From “Access” to “Understanding”

Advances in computer vision and cloud AI made it possible to move beyond text reading into scene interpretation and contextual awareness.

This laid the foundation for:

- Real-time object and hazard detection

- Conversational scene description

- AI-driven environmental interpretation

Assistive technology began transitioning from functional accessibility to intelligent perception.

Trend 2: Wearables Introduced Persistent, Ambient Awareness

2016 also marked the rise of body-worn sensors and mobility-focused wearables.

Early innovations explored:

- Head- and chest-level obstacle detection

- Orientation tracking

- Haptic and spatial feedback

This initiated a long-term shift toward continuous environmental awareness, rather than stop-and-scan interactions.

Trend 3: Mobility Expanded Beyond GPS Into Spatial Computing

Developers recognized that GPS alone could not solve indoor navigation, last-meter accuracy, or dynamic urban mobility.

This drove growth in:

- Indoor mapping

- Sensor fusion (IMUs, vision, depth)

- Spatial audio navigation

- Hybrid mobility intelligence

These capabilities now underpin next-generation mobility platforms like DirectMe.

Trend 4: Cognitive Load Became a Strategic Design Priority

By 2016, a critical insight emerged:

Users don’t just need more features—they need fewer decisions.

Long-term adoption began favoring solutions that delivered:

- Predictability over novelty

- Seamless workflows over feature overload

- Reduced mental effort over experimental capability

This shift now defines best-in-class assistive product strategy.

Trend 5: Smart Glasses Emerged as a Promising—But Evolving—Interface

Smart glasses gained early traction as a potential hands-free platform for spatial computing and environmental awareness.

Their promise included:

- Head-aligned sensing

- Hands-free interaction

- Faster access to environmental context

- More natural integration with mobility behaviors

However, open questions about hardware readiness, usability, social acceptance, battery life, and reliability have called for measured, disciplined evaluation rather than premature product commitment.

2025–2026: The Market Is Shifting From Features to Systems

Across blind and low-vision users, clinicians, and accessibility leaders, several clear adoption patterns are emerging:

From Features to Workflows

Users increasingly value how technology fits into daily routines, not how many features it offers.

On-Device Processing

Demand is rising for faster response times, offline capability, privacy, and lower cloud dependence.

Predictability Over Novelty

Products that behave consistently and reliably outperform those that prioritize experimental features.

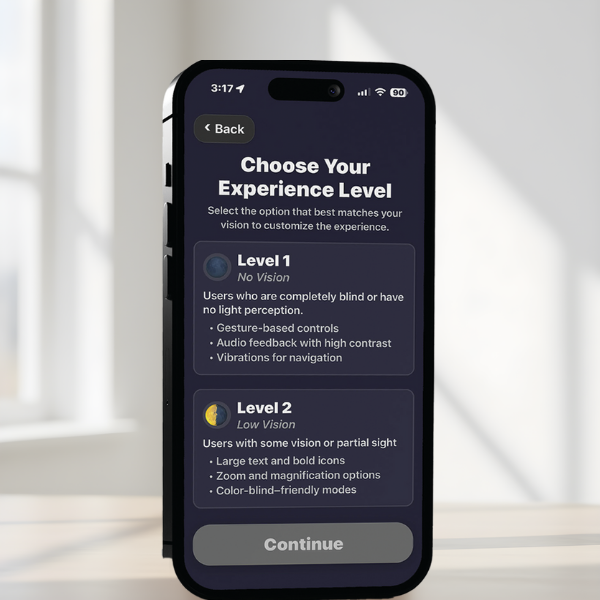

Decision Fatigue Is the Real Bottleneck

The most valuable solutions are those that reduce the number of choices users must make throughout the day.

What This Means Strategically for Assistive Technology

- The future is ecosystems, not standalone tools

- Smartphones remain central to reading, identification, and communication

- Mobility tools augment—but do not replace—foundational cane and O&M skills

- Indoor navigation remains an active innovation frontier

- Cognitive load reduction is now a competitive advantage, not just a UX goal

This is a platform opportunity—not a single-product race.

GoVivid’s Strategy: Build the Intelligence Layer, Not Just the Device

GoVivid is designed around a single strategic principle: build the intelligence layer, not just the device.

GV Discover: The AI Perception Foundation

GV Discover serves as the core environmental intelligence layer, supporting:

- Text reading

- Object identification

- Scene interpretation

- On-demand situational awareness

Its focus is not more features—it’s faster understanding with less friction.


DirectMe: Mobility as an Integrated System

DirectMe turns wearable mobility aids from isolated tools into a coordinated spatial awareness platform.

Iris — Head-Worn Orientation Sensor

Enables 3D spatial audio cues aligned with head movement, helping users interpret walls, doorways, and nearby people with natural awareness.

Seymour — Cane-Mounted Detection Device

Attaches to a white cane to detect:

- Objects

- Obstacles

- Elevation changes

- Overhead hazards

- Room-level spatial cues

This approach enhances—rather than replaces—traditional mobility skills, reinforcing adoption and trust.

Smart Glasses: A Strategic Feasibility Track, Not a Single Bet

In 2026, the way we all interact with AI and its hardware interfaces will evolve rapidly. GoVivid is actively investigating whether smart glasses could be a critical new technology for delivering the benefits of the GoVivid ecosystem to a broader set of blind and visually impaired (BVI) users.

Rather than committing prematurely to a single form factor, GoVivid is evaluating:

- Smart glasses

- Wearables

- Phone-centric workflows

- Sensor-based mobility hardware

The objective is platform flexibility—ensuring GoVivid can deploy intelligence across the most reliable, usable, and scalable interfaces as the market evolves.

Smart glasses represent a promising pathway, not the only pathway.

Key Takeaways

- Assistive technology has moved from single-purpose tools to coordinated systems that support daily movement

- AI and sensors are most valuable when they reduce cognitive load, not add complexity

- Wearables and smart devices succeed when they complement existing mobility skills

- Predictability and reliability now matter more than experimental features

- The white cane remains the foundation. Technology works best when it builds on that trust

- The next phase of innovation focuses on awareness, orientation, and smoother transitions between moments

The opportunity ahead is not about replacing how blind and visually impaired people move.

It is about making that movement more predictable, informed, and safe.